Why Parse the User-Agent?

November 22nd, 2014

tech

User-Agent:

"UA strings need to die a horrible horrible death."

-- UnoriginalGuy

"The UA string is flawed by itself. it shouldn't even be used anymore. The fact that browser manufacturers have to include all sorts of stuff is proof that this system doesn't work."

-- guardian5x

"Well written sites use feature detection, not user-agent detection."

-- Strom

"We'd all be better off if they just stopped sending the UA string altogether"

-- nly

The modern advice is to use feature detection. Instead of the server interpreting the User-Agent header to guess at what features the browser supports, just run some JavaScript in the browser to see if the specific feature you need is supported. When this fits your situation it's great, but it's almost always slower. Often the slowdown is too small to matter, just a few more lines of JavaScript, but let's look at a case where the performance cost is substantial.

Let's say I want to show you a picture of a kitten:

How big is that? [1] It depends on how we encode it:

| Encoding | Size |
| --- | --- |
| Unoptimized JPEG | 290.0 kB |
| Optimized JPEG | 38.6 kB |
| Optimized WebP | 20.2 kB |

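WebP is the smallest, but not every browser supports it. With feature detection, the page would look something like:

    <img id=img>
    <script>
      var img = document.getElementById("img");
      if (SupportsWebP()) {
        img.src = "image.webp";
      } else {
        img.src = "image.jpg";
      }
    </script>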

First the browser downloads the HTML, then it runs the JavaScript, and then depending on the value of SupportsWebP() [2] it either loads image.webp or image.jpg. What's inefficient about this? How is this worse than just handling <img src="image.webp">?

The problem is that this breaks the preload scanner. Technically, the browser is supposed to make its way through the web page piece by piece, handling each bit as it comes to it. For example, if it gets to some external JavaScript, it's supposed to fetch and run that script before continuing on with anything else. To load your page faster, however, your browser cheats. While it's waiting for that script to load, it looks ahead through the rest of the page for resources it thinks it's going to need and fetches them. And, critically, that scanner doesn't run JavaScript.
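To make the "doesn't run JavaScript" point concrete, here is a toy stand-in for a preload scanner. It is only a sketch: the function name `scanForPreloads` and the file name `external.js` are made up for illustration, and real browsers tokenize HTML properly rather than using a regular expression. The point is just that a scanner that only reads markup can find a `src` written in the HTML but not one assigned by a script.

```javascript
// Toy illustration only: looks for src attributes on <img> and <script> tags.
// It reads markup and never executes any script, like a preload scanner.
function scanForPreloads(html) {
  const urls = [];
  // src value may be double-quoted, single-quoted, or unquoted.
  const tagPattern =
    /<(?:img|script)\b[^>]*?\bsrc\s*=\s*(?:"([^"]*)"|'([^']*)'|([^\s>]+))/gi;
  let match;
  while ((match = tagPattern.exec(html)) !== null) {
    urls.push(match[1] ?? match[2] ?? match[3]);
  }
  return urls;
}

// Hypothetical page using an ordinary img tag.
const withImgTag =
  '<script src="external.js"></script><img src="image.webp">';

// Hypothetical page that sets the image source from an inline script.
const withJavaScript =
  '<script src="external.js"></script><img id=img>' +
  '<script>document.getElementById("img").src = "image.webp";</script>';

console.log(scanForPreloads(withImgTag));     // finds the script and the image
console.log(scanForPreloads(withJavaScript)); // finds only the script
```

In the second page the image URL exists only inside script text, so nothing that merely scans tags can discover it early.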

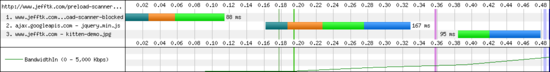

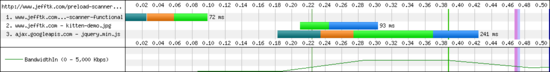

Here are two example pages, containing a single external script and an

image: one loads the image with JavaScript and one uses an ordinary

img tag. Running these both through WebPageTest (1,

2),

here are charts showing how the browser loaded the page:

You can see that in the JavaScript case the browser loaded everything

in order while when using an img tag the two files could be

loaded in parallel. [3]

To emit HTML that references either a JPEG or a WebP depending on the browser, the server needs some way to tell whether the browser supports WebP. Because this feature is so valuable, there is a standard way of indicating support for it: include image/webp in the Accept header. Unfortunately this doesn't quite work in practice. For example, Chrome v36 on iOS broke support for WebP images outside of data: URLs but was still sending Accept: image/webp. Similarly, Opera added image/webp to their Accept header before they supported WebP lossless. And no one indicates in their Accept header whether they support animated WebP.
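For reference, checking the Accept header on the server could look roughly like this. This is a minimal sketch, not anything from PageSpeed: the function name `acceptsWebP` is made up, and it only reports what the browser claims, which as described above is not always what the browser can actually do.

```javascript
// Returns true if the Accept header value lists image/webp
// without an explicit q=0 (which would mean "not acceptable").
function acceptsWebP(acceptHeader) {
  if (!acceptHeader) return false;
  return acceptHeader.split(",").some((entry) => {
    const [type, ...params] = entry
      .split(";")
      .map((part) => part.trim().toLowerCase());
    if (type !== "image/webp") return false;
    const q = params.find((p) => p.startsWith("q="));
    return q === undefined || parseFloat(q.slice(2)) > 0;
  });
}

// Example header values, made up for illustration.
console.log(acceptsWebP("text/html,image/webp,*/*;q=0.8"));
console.log(acceptsWebP("text/html,*/*;q=0.8"));
```

Note that nothing here can distinguish lossy, lossless, or animated WebP support, because the header does not carry that information.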

This leaves us having to look at the User-Agent header to figure out what the browser is, and then look up what features that browser supports. The header is ugly and I hate having to do this, but if we want to make pages fast we need to use the UA.

(The full gory details: kernel/http/user_agent_matcher.cc.)
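As a rough illustration of the idea, and not a reproduction of what that file does, a server could combine the Accept header with a small list of User-Agent patterns known to misreport support. Everything named here is hypothetical: `WEBP_DENYLIST`, `chooseImageUrl`, and the shape of the `headers` object. The single pattern covers the Chrome v36 on iOS case mentioned above, relying on the fact that Chrome on iOS identifies itself with a `CriOS/<version>` token.

```javascript
// Hypothetical denylist of browsers that send Accept: image/webp
// but should still be served JPEG.
const WEBP_DENYLIST = [
  /\bCriOS\/36\./, // Chrome 36 on iOS
];

// headers: object with lower-cased header names, as an assumption.
function chooseImageUrl(headers) {
  const accept = headers["accept"] || "";
  const userAgent = headers["user-agent"] || "";
  const claimsWebP = accept.toLowerCase().includes("image/webp");
  const knownBroken = WEBP_DENYLIST.some((pattern) => pattern.test(userAgent));
  return claimsWebP && !knownBroken ? "image.webp" : "image.jpg";
}

// The server can then emit a plain img tag the preload scanner can see.
const exampleHeaders = {
  "accept": "text/html,image/webp,*/*;q=0.8",
  "user-agent":
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 " +
    "(KHTML, like Gecko) Chrome/36.0.1985.125 Safari/537.36",
};
console.log('<img src="' + chooseImageUrl(exampleHeaders) + '">');
```

A real list would need many more entries and regular maintenance, which is a large part of why this approach is unpleasant.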

[1] I uploaded this picture to my server as a poorly optimized JPEG, but I'm running PageSpeed. You should be seeing WebP if your browser supports it, or an optimized JPEG if it doesn't.

[2] Which would be a bit of an awkward function.

[3] This is only a problem because of the external script reference. If there were nothing to block the regular parser then both versions would be just as good. (1, 2) Most pages do reference external scripts, however, so in practice the preload scanner helps a lot and you don't want to disable it.
